Have you ever tried to scrape data from a website only to be blocked, served CAPTCHAs, or hit with IP bans? This is a common challenge for web scrapers, but there is a well-known workaround: routing your Python scraper's traffic through a proxy. A proxy acts as an intermediary between the scraper and the website, so the website sees the proxy's IP address instead of yours, which can help the scraper get around IP-based restrictions.

In this post, we’ll look at why a proxy is often necessary for web scraping and how to set one up in Python. By the end, you’ll know how to work around these restrictions and collect the data you need.

What Is a Proxy?

A proxy server acts as an intermediary between a client and a web server, allowing the client to access the server indirectly. In a web scraping project, a proxy server can help us mask the IP address of the scraper and make it appear as though the request is coming from a different location.

This matters because many websites rate-limit or block requests coming from a single IP address. By sending requests through one or more proxies, the scraper can stay under those limits and keep running with fewer interruptions.

Several types of proxies are available for web scraping, each with its own advantages and disadvantages. The most common are HTTP, HTTPS, SOCKS4, and SOCKS5 proxies.

HTTP and HTTPS proxies handle web traffic directly and suit most scraping tasks, which mainly involve fetching pages from websites.

SOCKS4 and SOCKS5 proxies, on the other hand, relay connections without interpreting the traffic, so they are better suited to tasks that use protocols other than HTTP, such as FTP or SMTP.

Additionally, SOCKS5 improves on SOCKS4 by supporting authentication, UDP traffic, IPv6, and hostname resolution on the proxy side.
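With the ‘requests’ library used later in this post, a SOCKS proxy is configured the same way as an HTTP proxy, only with a different URL scheme. The sketch below assumes the optional SOCKS extra is installed (‘pip install requests[socks]’); the helper name ‘socks_proxies’ and the host and port values are hypothetical placeholders.

```python
def socks_proxies(host: str, port: int, remote_dns: bool = True) -> dict:
    """Build a `proxies` dict for requests that routes traffic through a SOCKS5 proxy.

    Hypothetical helper; actually sending requests through it needs
    `pip install requests[socks]`.
    """
    # 'socks5h' asks the proxy to resolve hostnames (DNS goes through the proxy);
    # 'socks5' resolves hostnames locally before connecting.
    scheme = "socks5h" if remote_dns else "socks5"
    proxy_url = f"{scheme}://{host}:{port}"
    # The same SOCKS proxy can carry both plain HTTP and HTTPS traffic.
    return {"http": proxy_url, "https": proxy_url}


# Placeholder address; replace with your provider's SOCKS5 endpoint.
proxies = socks_proxies("127.0.0.1", 1080)
print(proxies)
# Usage (requires the SOCKS extra):
# requests.get("https://www.example.com", proxies=proxies, timeout=10)
```

Choosing ‘socks5h’ over ‘socks5’ keeps DNS lookups on the proxy side, so the target site's hostname is not resolved from your own network.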

How to Find a Reliable Proxy Provider?

When it comes to finding a reliable proxy provider, there are several factors to consider.

First, you should look for a provider that offers a wide range of proxy types, including data center proxies, residential proxies, and mobile proxies.

You should also check that the provider has a large pool of proxies, so you have plenty of IP addresses to choose from.

Additionally, the provider should offer fast and reliable proxies with low latency and high uptime.

Finally, you should consider the provider’s pricing, customer support, and any additional features they offer, such as API access or session control.

Commonly recommended proxy services include Oxylabs, Luminati (now Bright Data), Smartproxy, and Geosurf. These providers offer a range of proxy types, including residential and mobile proxies, and have large proxy pools with low latency and high uptime. However, it’s always worth doing your own research and choosing the provider that best suits your specific needs and budget.

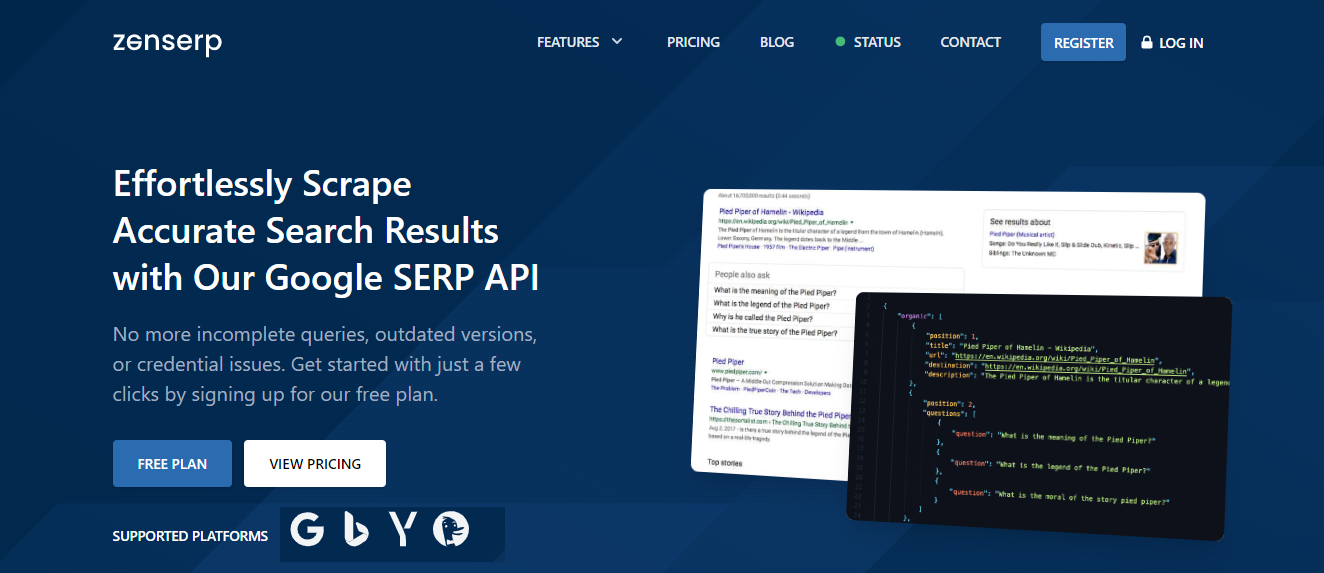

Is Zenscrape a Proxy Provider?

Zenscrape is not a proxy provider, but it is a web scraping API that allows developers to extract search engine data from Google, Bing, Yahoo, and other search engines. It offers various features, including SERP scraping, keyword search, and data extraction.

Zenscrape uses its proxy pool to enable users to bypass IP blocks, geo-restrictions, and other web scraping obstacles. By using Zenscrape’s API, developers can access data in a structured format, making it easy to integrate into their applications.

How Do You Set up a Proxy in Python?

To set up a proxy in Python, we can use the ‘requests’ library, a popular HTTP library for Python. The first step is to install it with pip by running the following command in a terminal or command prompt: ‘pip install requests’.

Once ‘requests’ is installed, we can start setting up a proxy. First, we import the library at the top of our Python script with the statement ‘import requests’.

Next, we define our proxy settings in a dictionary that maps each URL scheme (‘http’ or ‘https’) to the address of the proxy that should handle it, including the port number. For example, if the proxy’s host is ‘example.com’ and its port is ‘8080’, we can define the proxy settings as follows:

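```python
proxy = {
    'http': 'http://example.com:8080',
    'https': 'http://example.com:8080',
}
```

Note that the ‘https’ entry still points to an ‘http://’ proxy URL. The key selects which requests use the proxy (here, requests to HTTPS sites), while the URL’s scheme describes how we connect to the proxy itself; most proxies accept a plain HTTP connection and tunnel HTTPS traffic through it with the CONNECT method.

We then use the ‘requests’ library to make HTTP requests through the proxy by passing the proxy settings to the ‘get’ method through its ‘proxies’ parameter, as shown in the following code example: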

```python
import requests

# Define proxy settings: keys are URL schemes, values are proxy URLs
proxy = {
    'http': 'http://example.com:8080',
    'https': 'http://example.com:8080',
}

# Make an HTTP request through the proxy (the timeout avoids hanging on a dead proxy)
response = requests.get('http://www.example.com', proxies=proxy, timeout=10)

# Print response content
print(response.content)
```

This is a simple example of how to set up a proxy in Python using the ‘requests’ library. We can modify the proxy settings based on our requirements, such as adding authentication or using a different protocol.
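Many paid proxies require a username and password. One common approach with ‘requests’ is to embed the credentials in the proxy URL (‘http://user:password@host:port’). The sketch below uses hypothetical helper names and placeholder credentials; it percent-encodes the credentials so that characters such as ‘@’ or ‘:’ in a password do not break the URL.

```python
from urllib.parse import quote


def build_proxy_url(host, port, username=None, password=None, scheme="http"):
    """Return a proxy URL, embedding percent-encoded credentials when given.

    Hypothetical helper for illustration; not part of requests itself.
    """
    if username is None:
        return f"{scheme}://{host}:{port}"
    # safe="" also encodes '/', ':' and '@', which would otherwise be
    # read as URL delimiters.
    user = quote(username, safe="")
    secret = quote(password or "", safe="")
    return f"{scheme}://{user}:{secret}@{host}:{port}"


def build_proxies(proxy_url):
    """Use the same proxy for both HTTP and HTTPS requests."""
    return {"http": proxy_url, "https": proxy_url}


# Placeholder values; substitute the credentials from your provider.
proxy = build_proxies(build_proxy_url("example.com", 8080, "user", "p@ss:word"))
print(proxy["https"])  # http://user:p%40ss%3Aword@example.com:8080
```

The resulting dictionary is passed to ‘requests.get’ through the ‘proxies’ parameter exactly as before; ‘requests’ decodes the credentials and sends them to the proxy in a ‘Proxy-Authorization’ header.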

How to Test the Proxy Connection?

To test the proxy connection, we can use the ‘requests’ library to send a test request through the proxy. A convenient target is a service that echoes back the caller’s IP address: if the request succeeds and the reported IP is the proxy’s rather than our own, the proxy is working correctly. Otherwise, we should check the proxy settings (host, port, scheme, and credentials) and try again.
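A minimal sketch of such a check follows. It assumes the public echo service at ‘https://httpbin.org/ip’, which returns the caller’s IP as JSON in an ‘origin’ field; the helper name ‘check_proxy’ is hypothetical. Catching the specific ‘requests’ exceptions separately makes it easier to tell an unreachable proxy from a slow one.

```python
import requests

# Public service that echoes the caller's IP as {"origin": "<ip>"}.
IP_ECHO_URL = "https://httpbin.org/ip"


def check_proxy(proxy_url, test_url=IP_ECHO_URL, timeout=10):
    """Return the IP the echo service sees through the proxy, or None on failure.

    Hypothetical helper for illustration.
    """
    proxies = {"http": proxy_url, "https": proxy_url}
    try:
        response = requests.get(test_url, proxies=proxies, timeout=timeout)
        response.raise_for_status()  # treat 4xx/5xx answers as failures
        return response.json().get("origin")
    except requests.exceptions.ProxyError as exc:
        print(f"Could not connect through the proxy: {exc}")
    except requests.exceptions.Timeout:
        print(f"No response within {timeout} seconds")
    except (requests.exceptions.RequestException, ValueError) as exc:
        # Other network errors, HTTP error statuses, or a body that is not JSON.
        print(f"Request failed: {exc}")
    return None
```

To use it, compare the result of ‘check_proxy("http://example.com:8080")’ (a placeholder address) with the IP returned by a direct ‘requests.get(IP_ECHO_URL, timeout=10).json()["origin"]’ call: a non-None result that differs from your own IP means traffic is going through the proxy.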

Conclusion

Setting up a proxy in Python is often essential for web scraping, because it lets us reach websites that restrict or rate-limit access to their content. With the ‘requests’ library, we can set up a proxy and make HTTP requests through it in just a few lines of code. However, we need to make sure the proxy settings are correct and be ready to troubleshoot connection errors when they occur.

Using proxies in web scraping also helps protect our own IP address from being blocked or blacklisted by websites. Because requests no longer come directly from our address, proxies reduce the chance of the scraper being detected and blocked, which makes them a valuable tool for data extraction.

FAQs

Do I Need a Proxy for Web Scraping?

Using a proxy for web scraping can be useful for avoiding IP blocking and accessing restricted content.

What Proxy to Use for Web Scraping?

Rotating or residential proxies are recommended for web scraping, since they make it harder for websites to detect the scraper and block its IP address.
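Rotation can be approximated on the client side by cycling through a list of proxies and moving on to the next one when a request fails. The sketch below is one simple way to do this with ‘itertools.cycle’; the pool addresses and the helper name ‘fetch_with_rotation’ are hypothetical, and many providers instead offer a single gateway endpoint that rotates IPs for you.

```python
import itertools

import requests

# Hypothetical proxy pool; replace with addresses from your provider.
PROXY_POOL = [
    "http://proxy1.example.com:8080",
    "http://proxy2.example.com:8080",
    "http://proxy3.example.com:8080",
]


def fetch_with_rotation(url, rotator, attempts=3, timeout=10):
    """Try up to `attempts` proxies from `rotator`; return the first successful response."""
    last_error = None
    for _ in range(attempts):
        proxy_url = next(rotator)  # next proxy in round-robin order
        proxies = {"http": proxy_url, "https": proxy_url}
        try:
            response = requests.get(url, proxies=proxies, timeout=timeout)
            response.raise_for_status()
            return response
        except requests.exceptions.RequestException as exc:
            last_error = exc  # remember the failure and move to the next proxy
    raise RuntimeError(f"All {attempts} attempts failed") from last_error


# One shared iterator, so successive calls keep rotating through the pool.
rotator = itertools.cycle(PROXY_POOL)
# response = fetch_with_rotation("https://www.example.com", rotator)
```

Sharing one iterator across calls spreads requests evenly over the pool; a production scraper might also drop proxies that fail repeatedly.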

How Do You Use a Proxy When Scraping a Website?

Pass the proxy settings to the ‘requests’ library through the ‘proxies’ argument when making HTTP requests to the website.
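Besides the ‘proxies’ argument, ‘requests’ also honors the standard ‘HTTP_PROXY’, ‘HTTPS_PROXY’, and ‘NO_PROXY’ environment variables, which is handy when you cannot change the scraper’s code. A minimal sketch, assuming a placeholder proxy address:

```python
import os

import requests

# Placeholder proxy address; substitute your provider's host and port.
PROXY_URL = "http://example.com:8080"

# requests reads these variables (Session.trust_env is True by default) and
# uses them for any scheme not already covered by an explicit `proxies=` argument.
os.environ["HTTP_PROXY"] = PROXY_URL
os.environ["HTTPS_PROXY"] = PROXY_URL

# Show the proxy settings requests would pick up from the environment for this URL.
print(requests.utils.get_environ_proxies("https://www.example.com"))

# A plain call would now be routed through the proxy:
# response = requests.get("https://www.example.com", timeout=10)
```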

Is Proxy Better Than VPN for Scraping?

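Both proxies and VPNs can work for web scraping, and which one is better depends on the specific requirements of the project. A proxy can be set per request and rotated across many IP addresses, whereas a VPN typically routes all of a device’s traffic through one exit IP at a time.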

Start using Zenserp today and unlock the power of reliable and scalable SERP data for your business!